We’re all rushing to use smart technology, or “AI,” to make decisions in our businesses. We’re letting it help us decide who to hire, who gets a loan, and what insurance should cost. It’s powerful stuff, and it promises to make us faster and smarter.

But we need to have a serious talk. Because the moment we let a piece of software make a life-changing decision about a real person, we’ve stepped into a whole new world of responsibility.

What happens when it gets it wrong?

This isn’t some philosophical debate for the future; it’s a very real problem right now. Thinking about “ethics” isn’t about being nice; it’s about basic self-preservation. Getting this wrong can destroy your company’s reputation, tick off your customers, and land you in a world of legal trouble.

So, how do we use this incredible power without causing a disaster? It comes down to a few common-sense rules.

Rule 1: Get Some Adult Supervision

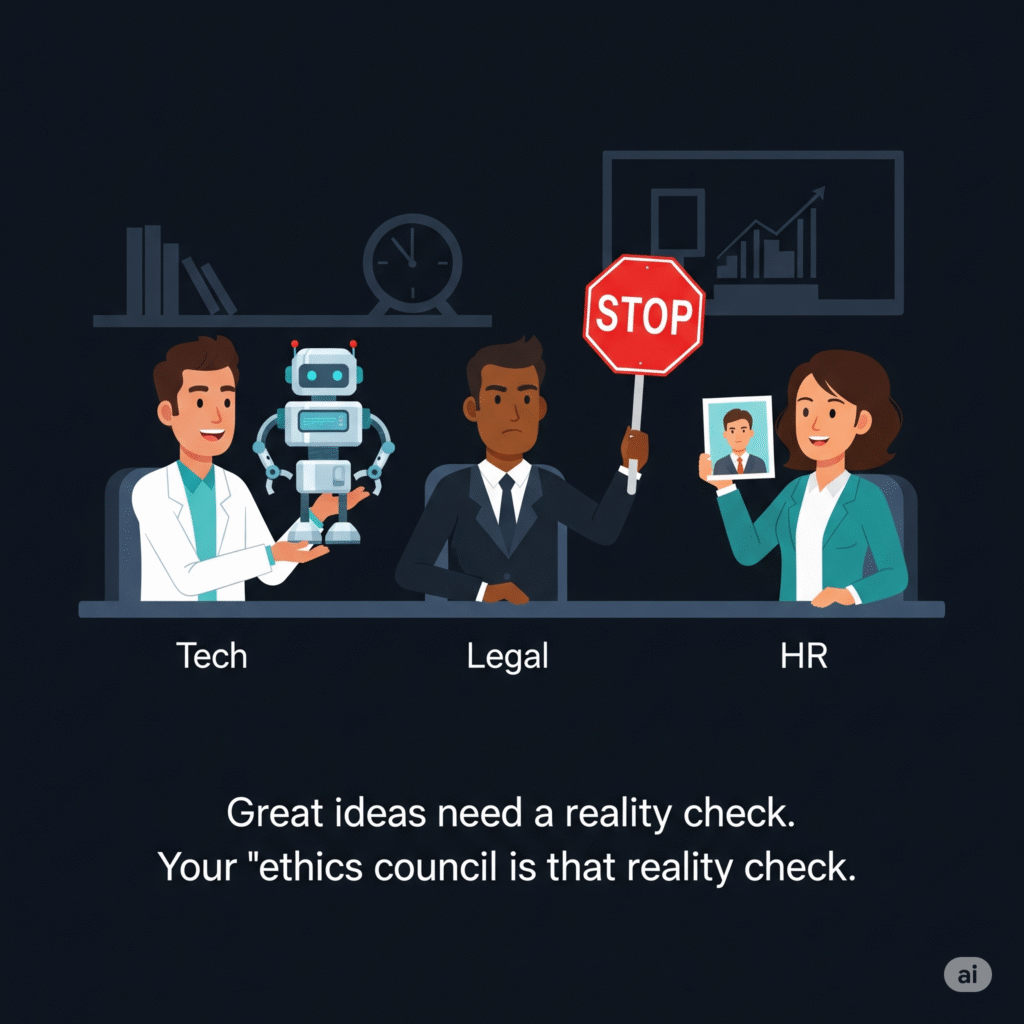

You can’t just let your data science team run wild in a lab. Before you even start building, you need to get a few different kinds of people in a room. Pull in someone from your legal team, someone from HR, a senior person from the department that will use the tool, and yes, your tech folks.

This group’s job is to act as the adult supervisors. Their first question should never be “Can we build this?” It has to be “Should we build this?” They set the rulebook and act as the referee, making sure that whatever you create is fair, safe, and makes sense for the business and its customers.

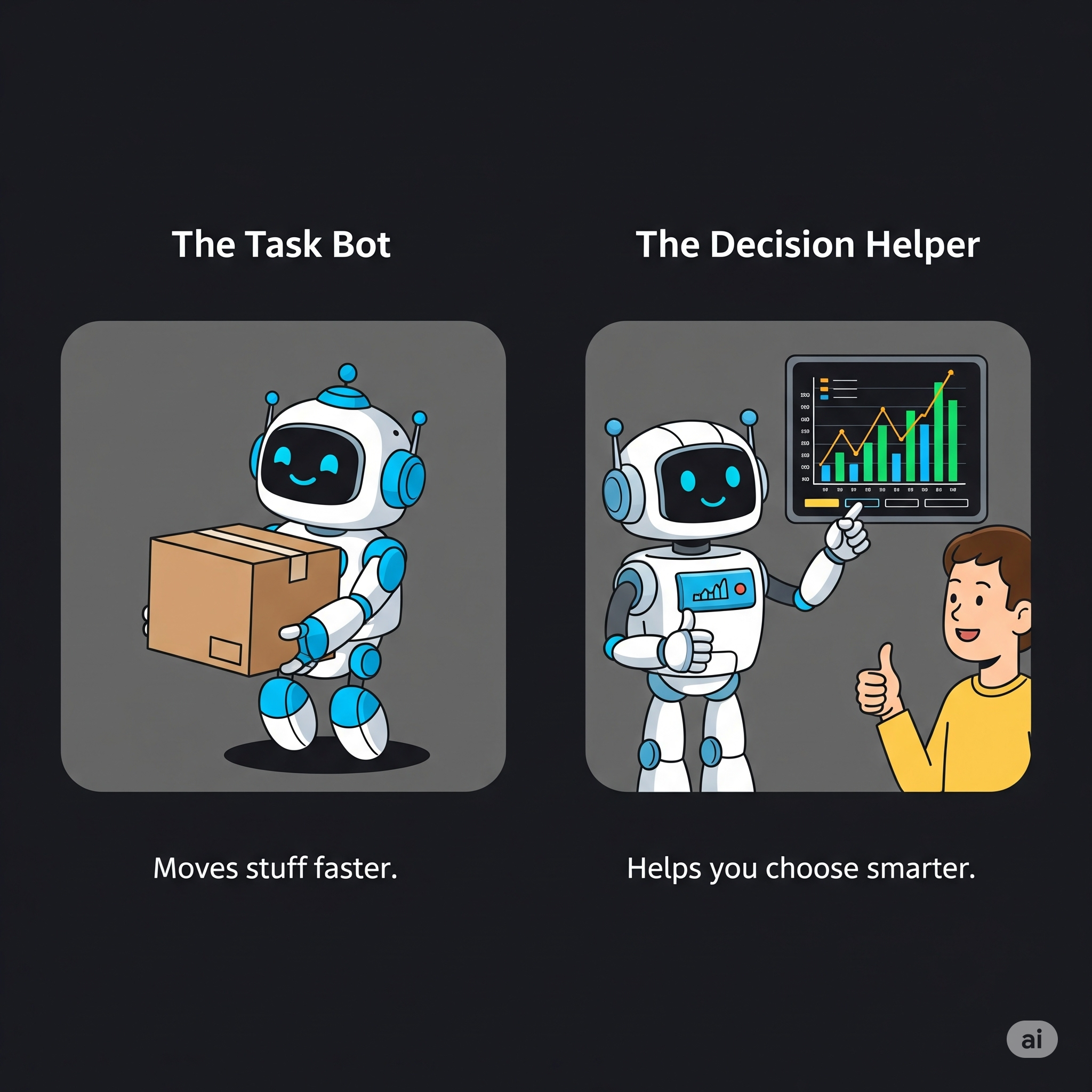

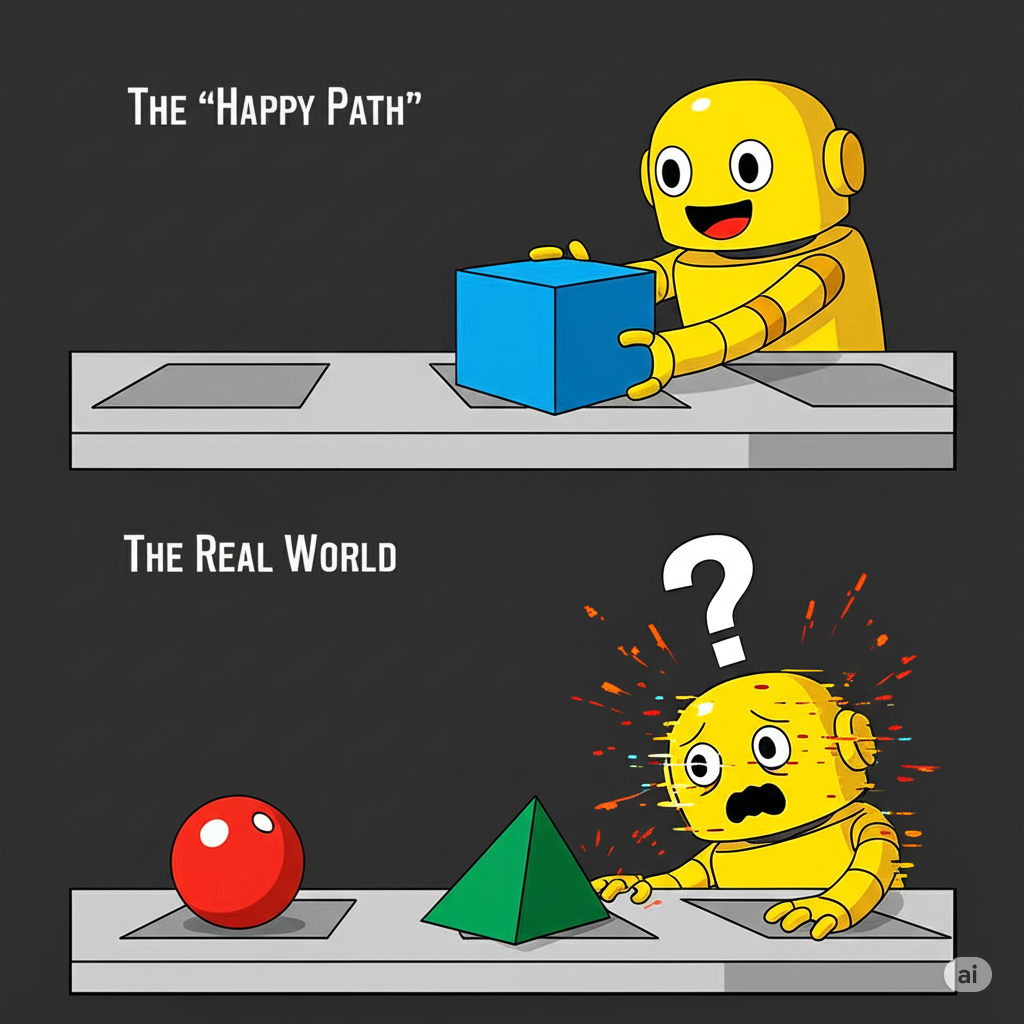

Rule 2: The Human Is Still the Pilot

This isn’t about robots taking over. Think of it more like a pilot and a smart co-pilot. The smart system (the co-pilot) can handle the routine flying, check all the instruments a thousand times a second, and manage the easy stuff. It can even recommend a course of action.

But when things get tricky—when there’s unexpected turbulence or a complex landing—the experienced human pilot takes the controls.

For your business, this means the system can approve the applications that are simple and straightforward, which might be 95% of them. But for the 5% or so that are borderline, unusual, or high-stakes, it automatically flags them and sends them to a human expert. The key is that it doesn’t just dump the problem; it gives the expert a neat summary of everything they need to know to make the final call. The human is still in charge.
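For the tech folks in the room, here is a rough sketch of what that hand-off could look like. Everything in it is an assumption made up for illustration: the `Application` fields, the `route` function, and the two cutoffs (`MIN_CONFIDENCE` and `HIGH_STAKES_AMOUNT`). Your own oversight group would decide what counts as borderline or high-stakes.

```python
from dataclasses import dataclass

# Hypothetical cutoffs for illustration; your oversight group would set the real ones.
MIN_CONFIDENCE = 0.90
HIGH_STAKES_AMOUNT = 50_000


@dataclass
class Application:
    applicant_id: str
    amount: float
    recommendation: str  # the system's suggestion: "approve" or "decline"
    confidence: float    # how sure the system is, from 0.0 to 1.0


def route(app: Application) -> dict:
    """Auto-approve only clear, low-stakes cases; send everything else to a person."""
    flags = []
    if app.recommendation != "approve":
        flags.append("system did not recommend approval")
    if app.confidence < MIN_CONFIDENCE:
        flags.append("low confidence (borderline case)")
    if app.amount >= HIGH_STAKES_AMOUNT:
        flags.append("high-stakes amount")

    if not flags:
        return {"decision": "auto_approve", "applicant_id": app.applicant_id}

    # Hand the expert a summary, not just the problem.
    return {
        "decision": "human_review",
        "applicant_id": app.applicant_id,
        "summary": {
            "amount": app.amount,
            "system_recommendation": app.recommendation,
            "system_confidence": app.confidence,
            "flags": flags,
        },
    }
```

Notice that in this sketch the system never says no on its own. It either approves an easy case or passes the file, with its notes, to a person. That is one possible design choice, not the only one.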

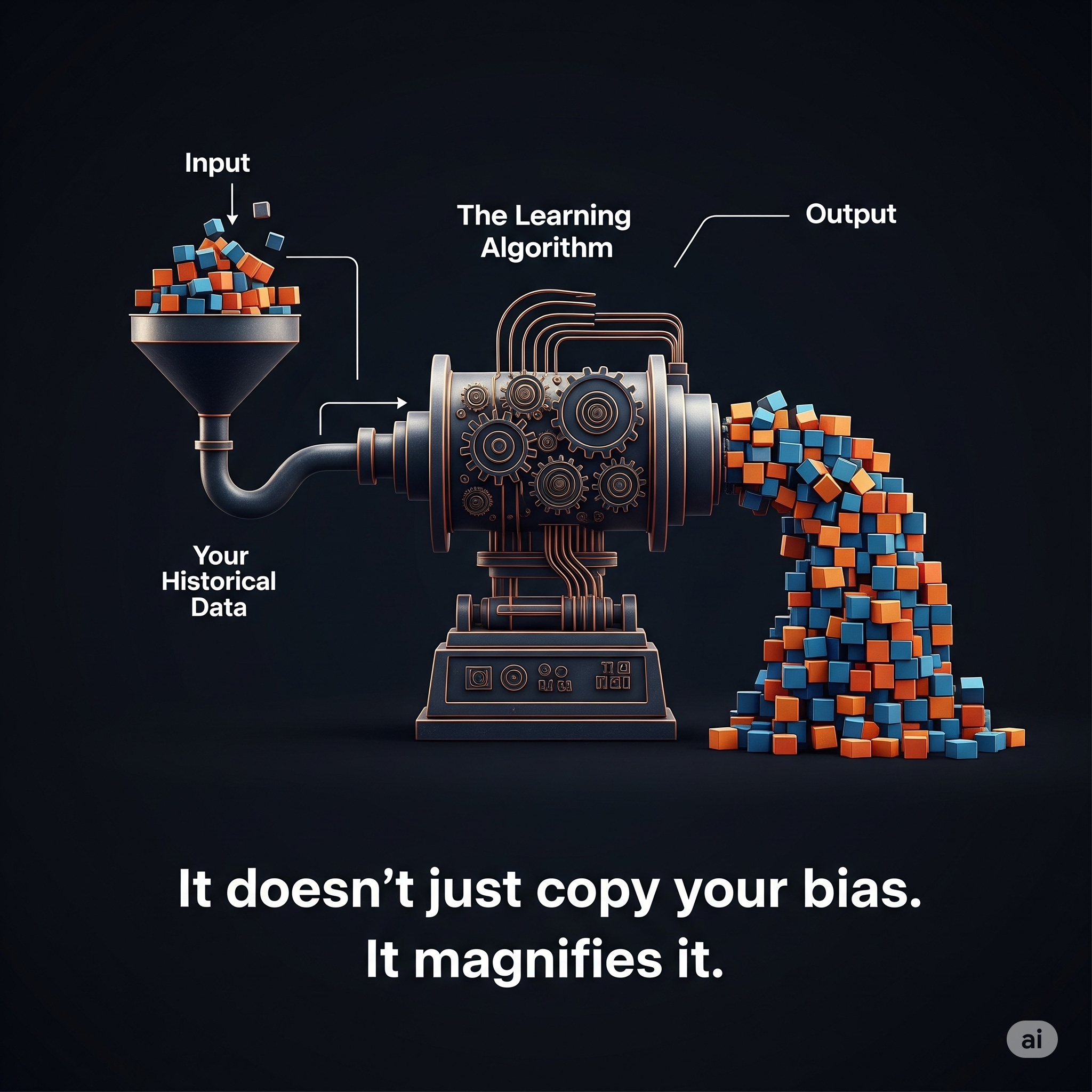

Rule 3: Your Tech Will Learn Your Bad Habits

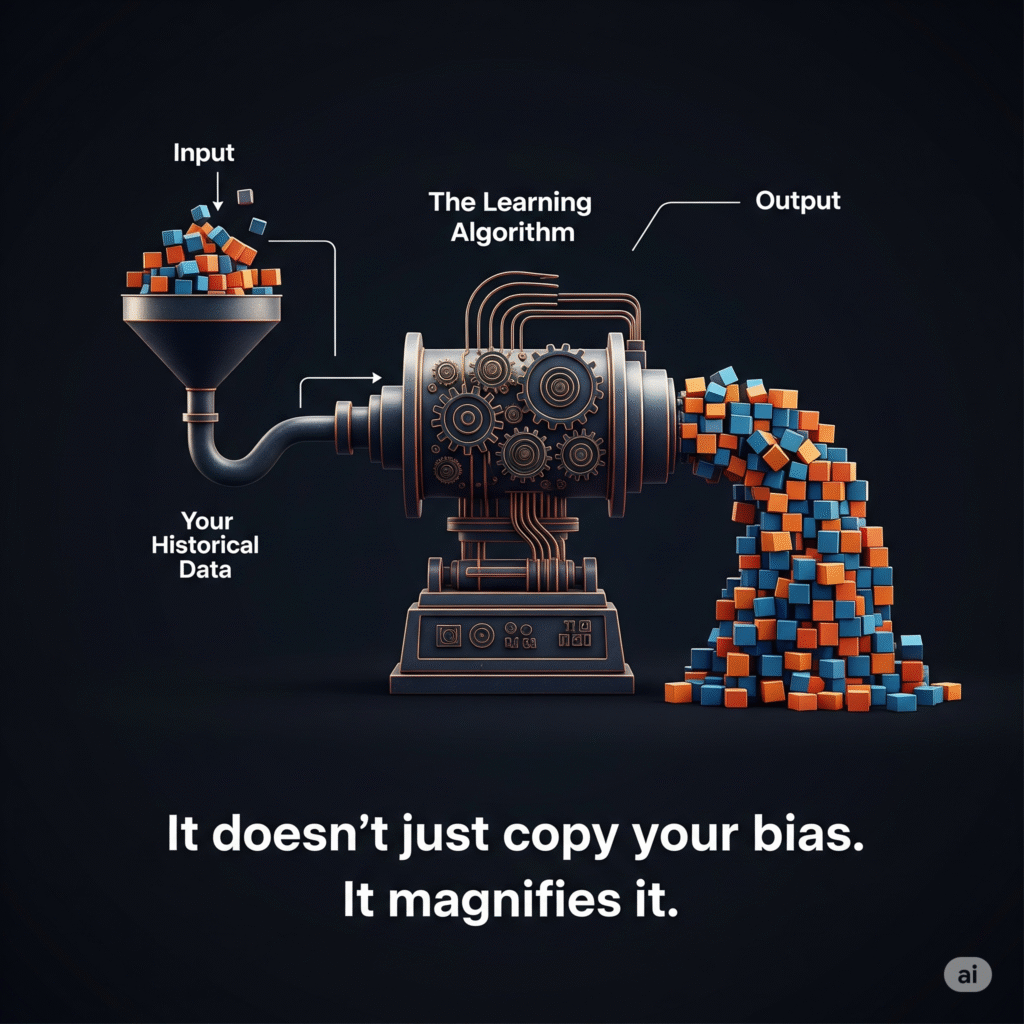

This is the big one. An automated system will learn from the data you feed it. And guess what? Your company’s historical data is probably full of unconscious human bias.

If you feed a hiring system 20 years of data in which managers mostly hired and promoted men, it’s going to learn that men are a better fit for leadership roles and start recommending them over equally qualified women. It won’t do this because it’s malicious; it will do it because that’s what you taught it. It will take your hidden bias and put it on steroids, making unfair decisions at lightning speed.

The only way to fight this is to check your data for these hidden biases before you ever start building. You have to actively clean up your data and design your system to be fair. Otherwise, you’re just automating discrimination.
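What does "checking your data" look like in practice? One simple first pass is to compare how often each group got the good outcome in your historical records. The sketch below does that with made-up numbers. The function names, the field names (`gender`, `promoted`), and the sample records are all hypothetical. The 0.8 cutoff borrows the "four-fifths" rule of thumb used in US employment guidance as a screening signal; it is a warning light, not a verdict, and passing it does not prove your data is fair.

```python
from collections import defaultdict

# Rule-of-thumb screening cutoff (the "four-fifths" heuristic), used here as an assumption.
FOUR_FIFTHS = 0.8


def selection_rates(records, group_key, outcome_key):
    """Share of each group that received the favorable outcome."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key]:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest


# Made-up history: 6 of 10 men promoted, 3 of 10 women promoted.
history = (
    [{"gender": "men", "promoted": True}] * 6
    + [{"gender": "men", "promoted": False}] * 4
    + [{"gender": "women", "promoted": True}] * 3
    + [{"gender": "women", "promoted": False}] * 7
)

rates = selection_rates(history, "gender", "promoted")
ratio = impact_ratio(rates)
needs_review = ratio < FOUR_FIFTHS
```

With these sample numbers, women were promoted at half the rate of men, so `needs_review` comes out true. That is exactly the kind of pattern a system would learn and repeat if nobody looked first.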

Rule 4: If You Can’t Explain It, Don’t Do It

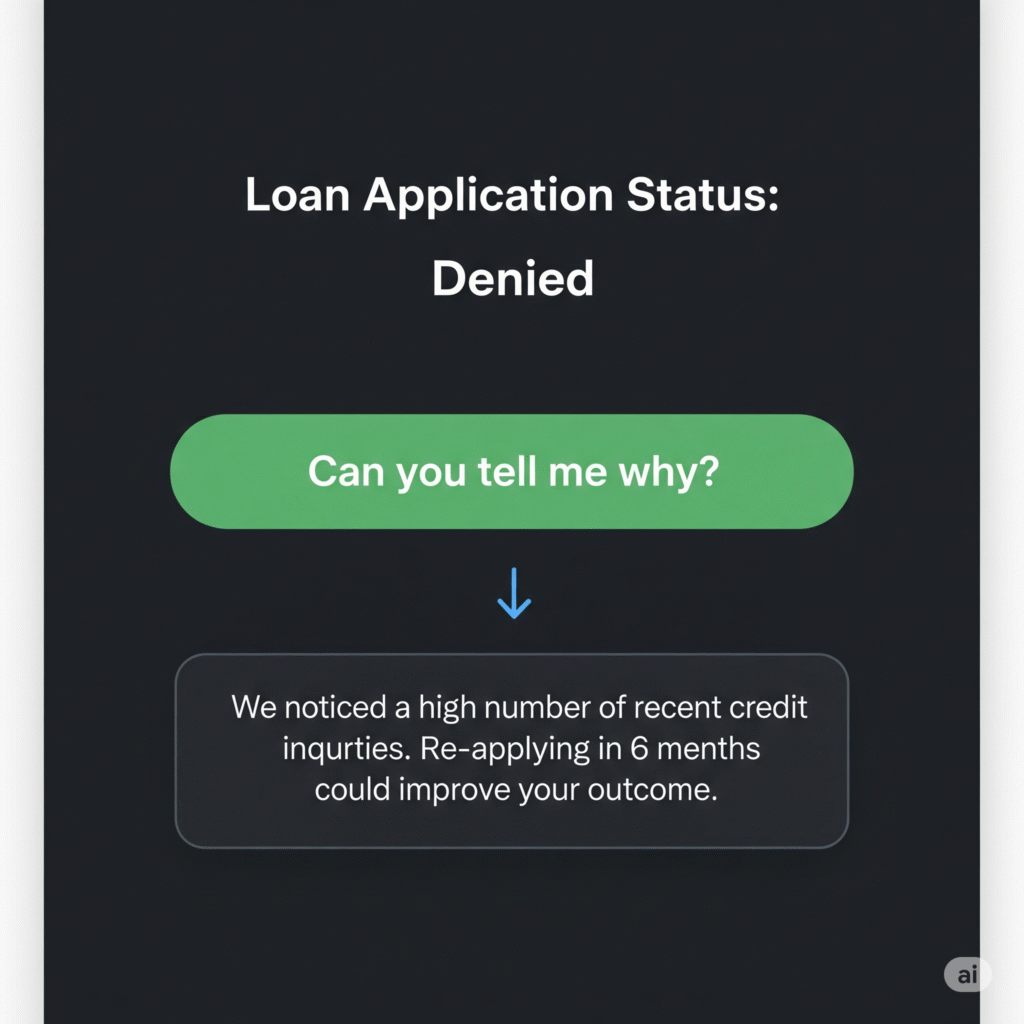

If your system denies someone a loan, you have to be able to tell them why. “The computer said no” is not an acceptable answer.

For any decision that has a real impact on someone’s life, the system needs a “Why?” button. It should be able to explain its reasoning in plain English. For example: “This loan was denied because of a high debt-to-income ratio.”

Better yet, it should be able to offer helpful advice: “To improve your chances, you could try reducing your total credit card debt.” This transparency builds trust and gives people a sense of control. If your smart system is just a mysterious black box, people will neither trust it nor tolerate it for long.
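Here is a minimal sketch of a “Why?” button, assuming the decision rests on explicit rules you can name. The rule list, both thresholds, and the applicant fields are hypothetical, and the second rule (credit history) is only there to show that more than one reason can apply. A system built on a learned model would need a different way to produce its reasons.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical thresholds, for illustration only.
MAX_DEBT_TO_INCOME = 0.40
MIN_CREDIT_HISTORY_MONTHS = 12


def debt_to_income(applicant: dict) -> float:
    income = applicant["monthly_income"]
    # Treat zero or negative income as an unbounded ratio instead of dividing by zero.
    return applicant["monthly_debt"] / income if income > 0 else float("inf")


@dataclass
class Rule:
    failed: Callable[[dict], bool]  # returns True when the applicant fails this rule
    reason: str                     # plain-English reason shown to the applicant
    advice: str                     # a concrete next step they could take


RULES = [
    Rule(
        failed=lambda a: debt_to_income(a) > MAX_DEBT_TO_INCOME,
        reason="a high debt-to-income ratio",
        advice="reducing your total credit card debt",
    ),
    Rule(
        failed=lambda a: a["credit_history_months"] < MIN_CREDIT_HISTORY_MONTHS,
        reason="a short credit history",
        advice="building a longer record of on-time payments",
    ),
]


def explain_denial(applicant: dict) -> list[str]:
    """Return one plain-English message for every rule the applicant failed."""
    return [
        f"This loan was denied because of {rule.reason}. "
        f"To improve your chances, you could try {rule.advice}."
        for rule in RULES
        if rule.failed(applicant)
    ]


applicant = {"monthly_debt": 2500, "monthly_income": 5000, "credit_history_months": 36}
messages = explain_denial(applicant)
```

For this made-up applicant, half of their income goes to debt, so `messages` holds a single explanation about the debt-to-income ratio along with the matching advice.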

This Is No Longer Optional

Navigating all this isn’t just about “doing the right thing.” It’s about smart business. Governments around the world are writing new rules for automated decisions. Customers are getting wiser. And a single, high-profile mistake can cause damage that takes years to repair.

Building these systems responsibly isn’t a brake on innovation. It’s the only way to make sure you have a license to keep innovating in the future.